- Published on

Performance issue in Frontend development

- Authors

- Name

- Yinhuan Yuan

Introduction

This blog post summarizes an article on performance issues in front-end development that was originally published in Chinese.

Original post: https://mp.weixin.qq.com/s/HezWSAsNFWE3UyO6fGjgHQ

- I. Overview

- II. Common Performance Optimization Strategies

- 2.0 Prerequisite Knowledge Points

- 2.1 Resource Request Phase

- 2.2 Page Rendering Phase

- III. Common Analysis Tools

- IV. Conclusion

- Reference Links:

Original by Silicon Step, Alibaba Cloud Developer, September 24, 2024, 20:30.

Alibaba Sister's Guide: The author has recently been trying to optimize the performance of the platform they are responsible for. This article summarizes some common strategies for front-end performance optimization. The journey of seeking enlightenment is more important than reaching the Spirit Mountain.

I. Overview

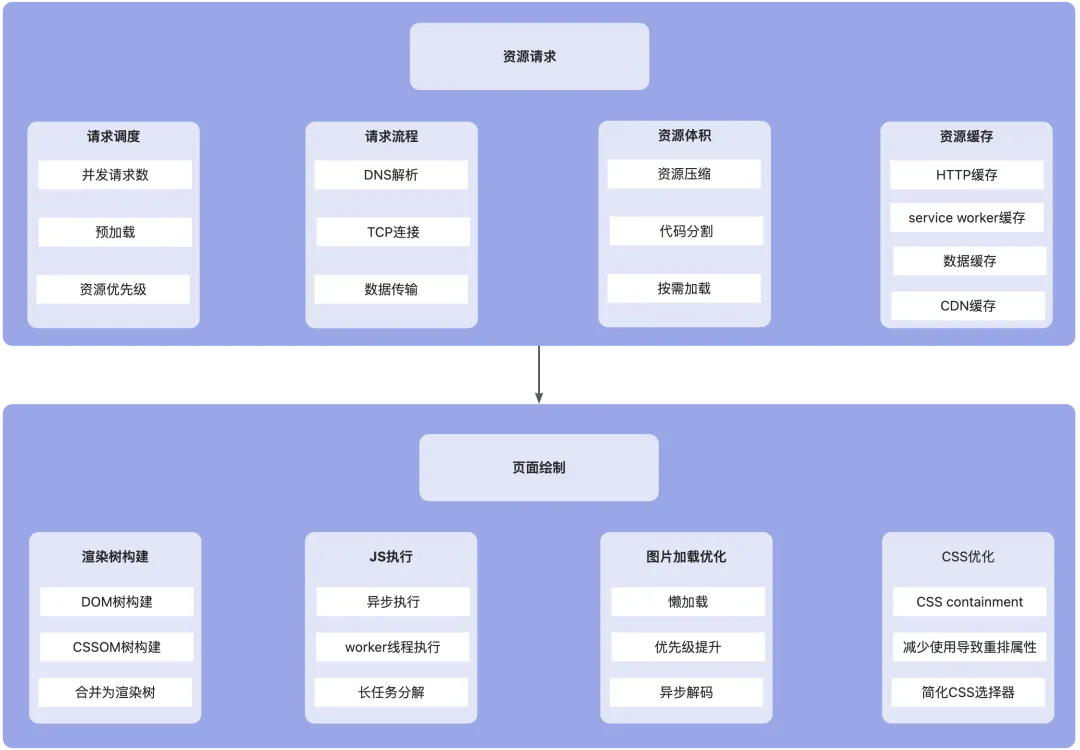

Recently, I've been trying to optimize the performance of the platform I'm responsible for and have summarized some common strategies for front-end performance optimization. The general approach to performance optimization is: first analyze the current performance status to define the problems, then propose and evaluate technical solutions based on performance optimization strategies, and finally implement and validate those solutions. To do a good job, one must first sharpen one's tools. Let's follow this article to understand the common performance optimization strategies, starting with the outline above. For a clear, large version of the image, see: https://developer.aliyun.com/article/1610662

II. Common Performance Optimization Strategies

2.0 Prerequisite Knowledge Points

2.0.1 User Page Access CRP (What happens when a user enters a URL)

There's a classic question in front-end interviews: What happens when a user enters a URL? This question can effectively test the breadth and even depth of a candidate's knowledge. Generally speaking, web performance optimization is essentially about shortening the time between "the user enters a URL" and "the user can perform the expected behavior on the webpage". For this reason, web performance optimization is usually treated as part of user experience optimization. In some consumer-facing (C-end) scenarios, the time it takes for users to perform expected behaviors is clearly correlated with the final revenue generated by the page, and related case studies can be found online.

So, before optimizing performance, we need to understand what we are optimizing. This brings us to the CRP (Critical Rendering Path) of a user's page visit. Simply put, after a user enters a URL, the following steps generally take place:

- The browser performs DNS resolution on the domain name of the input URL to obtain the IP address;

- Establish a connection with the target server based on the IP address (HTTP/1.1 / HTTP/2 / HTTP/3);

- Send an HTTP request to the server based on the established connection;

- The target server processes the request and returns an HTTP response;

- The browser receives the server's response, which contains the HTML (we only consider the case where HTML is requested first);

- The browser parses the HTML, builds the DOM tree based on HTML and JavaScript scripts, builds the CSSOM tree based on CSS, and synthesizes the render tree;

- Calculate node layout information combining the render tree and screen resolution and other related information;

- The browser renders the page based on the render tree and layout information;

- Finally, different layers are merged into the final image, appearing on the page;

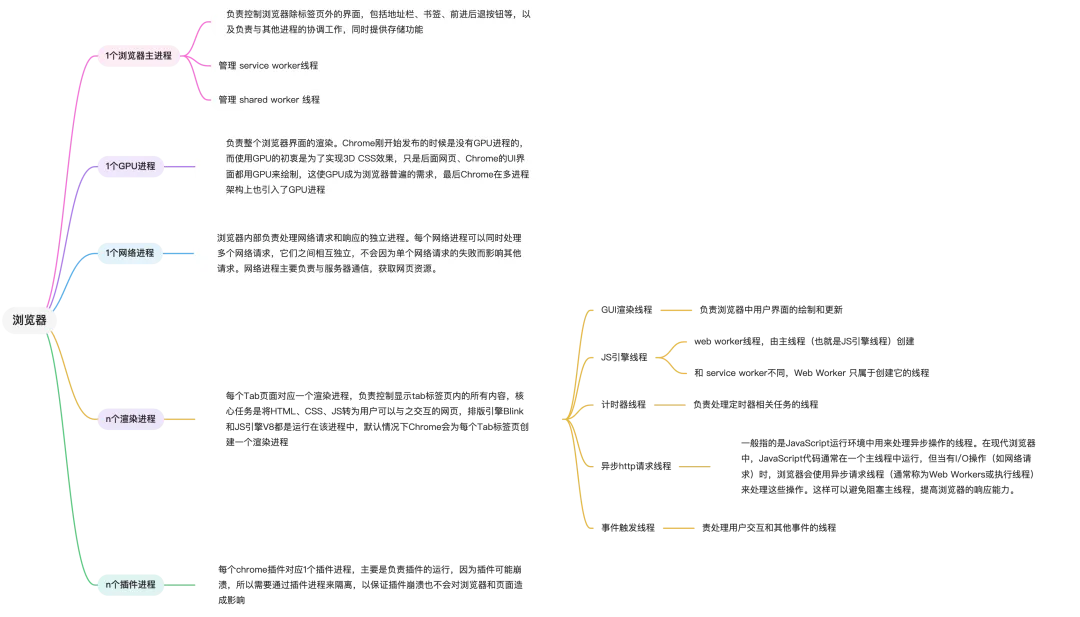

2.0.2 Browser's Process and Thread Mechanism

2.0.3 Page Repainting & Reflow

Repainting occurs when the appearance of an element (such as color, background, or shadow) changes: the browser needs to repaint the element but does not need to recalculate its layout. Repainting does not affect the structure of the document; it only updates the visual presentation of the element. Examples include changing the background color of an element or the color of its text.

Reflow, also known as layout, occurs when the geometric properties of an element (such as width, height, or position) change and the browser needs to recalculate the layout. A reflow can cascade to other elements, and in the worst case to the entire document, so it is generally more expensive than a repaint. Examples include changing the size of an element (width or height), adding or removing DOM elements, and changing the margin, padding, or border of an element.

Repainting is relatively lightweight and usually doesn't significantly affect performance. Reflow, on the other hand, can cause performance issues, especially when triggered frequently, because each reflow may affect a large part of the document and is followed by a repaint.

2.1 Resource Request Phase

2.1.0 Optimization Approach

First, we define several related factors of "resource request time": number of concurrent requests, single request time, and resource request order. Among these, the resource request order cannot directly shorten the "resource request time", but it can make "critical resources" complete the request first, thereby allowing page rendering to start first.

2.1.1 Increase the Number of Concurrent Requests

By default, browsers limit the number of concurrent HTTP/1.x connections to the same domain, usually to around 6-8. This means that the browser can have at most 6-8 requests in flight at the same time to the server under the same domain name. Requests exceeding this number are queued. We can increase the number of concurrent requests through the following methods.

2.1.1.1 Use Multiple Domain Names (also known as Domain Sharding, Domain Load Balancing)

Domain sharding is an optimization technique aimed at improving webpage loading speed and resource request concurrency. Its basic principle is to distribute the static resources of the website (such as images, CSS, JavaScript files, etc.) across multiple different domain names or subdomains, to bypass the browser's limit on concurrent requests to the same domain name.

- Use multiple subdomains

- For example, if your main domain is example.com, you can use static1.example.com, static2.example.com and other subdomains to host static resources.

- Use different top-level domains

- Resources can be distributed to different top-level domains, such as example1.com, example2.com, etc.

- CDN support

- Many Content Delivery Networks (CDNs) allow you to use multiple domain names to distribute content.
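As a rough illustration of the subdomain approach, the sketch below maps each asset path to one of several shard hosts. The host list reuses the example subdomains above, and the helper names (`hashPath`, `shardUrl`) are hypothetical. The mapping is deterministic so that a given resource is always requested from the same host and stays cacheable.

```typescript
// Hypothetical shard hosts, taken from the example subdomains above.
const SHARD_HOSTS = ["static1.example.com", "static2.example.com"];

// Simple deterministic string hash (djb2 variant); any stable hash would do.
function hashPath(path: string): number {
  let hash = 5381;
  for (let i = 0; i < path.length; i++) {
    // ">>> 0" keeps the value an unsigned 32-bit integer.
    hash = ((hash * 33) ^ path.charCodeAt(i)) >>> 0;
  }
  return hash;
}

// Map an asset path to one shard host. The same path always maps to the same
// host, so the browser's HTTP cache is not split across shards.
function shardUrl(path: string, hosts: string[] = SHARD_HOSTS): string {
  if (hosts.length === 0) {
    throw new Error("at least one shard host is required");
  }
  const normalized = path.startsWith("/") ? path : `/${path}`;
  const host = hosts[hashPath(normalized) % hosts.length];
  return `https://${host}${normalized}`;
}

console.log(shardUrl("/img/logo.png"));
console.log(shardUrl("/js/vendor.js"));
```

Picking the host at random on every page view would also spread requests, but it would make the same file appear under different URLs and defeat caching, which is why a stable hash is used here.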

2.1.1.2 Upgrade to HTTP/2

HTTP/2 is the second major version of the HTTP protocol, aimed at improving network performance and efficiency. In HTTP/1.0, each request typically requires a new TCP connection, which adds the overhead of establishing and closing connections. HTTP/1.1 with Keep-Alive reuses a connection, but requests on it are processed sequentially. HTTP/2 allows multiple requests and responses to be processed concurrently on the same connection (multiplexing), reducing the number of connections and latency. Below, we'll introduce HTTP/2's multiplexing and other features.

- Multiplexing

- HTTP/2 allows multiple requests and responses to be sent concurrently on a single connection, without waiting for the previous request to complete. This reduces latency and improves resource utilization.

- Header Compression

- HTTP/2 uses the HPACK algorithm to compress request and response headers, reducing the amount of data transmitted, especially in cases where request headers are large.

- Server Push

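- The server can proactively push resources to the client without the client requesting them. This means the server can send resources needed for a page (such as CSS and JavaScript files) in advance when the client requests that page. Note that browser support for this feature has since declined; Chrome, for example, disabled Server Push by default starting with version 106.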

- Binary Framing

- HTTP/2 uses a binary format instead of a text format, making parsing more efficient and reducing the possibility of errors.

- Priority and Flow Control

- HTTP/2 allows clients to set priorities for requests, and servers can optimize the order of sending resources based on these priorities. Additionally, the flow control mechanism can prevent a single stream from occupying too much bandwidth.

2.1.1.3 Upgrade to HTTP/3

HTTP/3 is the third major version of the Hypertext Transfer Protocol, built on the QUIC (Quick UDP Internet Connections) protocol. QUIC was originally designed by Google to reduce latency and improve Web performance. HTTP/3 addresses some issues present in HTTP/2.

- Reduced Connection Establishment Time

- HTTP/2, based on TCP and TLS, requires multiple round-trip times (RTTs) to complete the handshake. HTTP/3 uses the QUIC protocol, which combines encryption and transport into a single process, allowing connection establishment to be completed in one RTT, and in the best case, can even resume sessions in zero RTT.

- Multiplexing Without Head-of-Line Blocking

- Although HTTP/2 supports multiplexing, the head-of-line blocking problem still exists at the TCP layer. HTTP/3, through QUIC's improved multiplexing capability, solves TCP's head-of-line blocking problem as it's based on UDP datagrams, where independent streams can continue to transmit even when packet loss occurs in other streams.

- Fast Packet Loss Recovery and Congestion Control

- QUIC implements a faster packet loss recovery mechanism. TCP needs to wait for a period of time to confirm packet loss, while QUIC can quickly respond to packet loss situations and adjust congestion control strategies accordingly using a more fine-grained acknowledgment mechanism.

- Connection Migration

- QUIC supports connection migration, allowing clients to maintain existing connection states when network environments change (such as switching from Wi-Fi to mobile networks). In HTTP/2, this situation would typically lead to connection interruption and the need for re-establishing connections.

2.1.2 Shorten Single Request Time

Let's define a rather crude formula for "single request time": "Single request time" = "Target resource size" / "User network speed". As platform providers, we can hardly improve the "user network speed", so we try to reduce the "target resource size" as much as possible. For the front-end, the "target resource size" is approximately equal to the "front-end build product size". There are three common approaches here: first, reduce the build product size; second, compress during network transmission; third, enable caching to avoid requests altogether.
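The crude formula can be written down directly. In this small sketch the function name, the sizes, and the 1024 KB/s network speed are illustrative assumptions, and latency and connection setup are deliberately ignored, just as in the formula.

```typescript
// "Single request time" = "Target resource size" / "User network speed".
// Sizes are in KB and speed in KB/s; the result is in milliseconds.
function estimateRequestMs(sizeKB: number, speedKBps: number): number {
  if (speedKBps <= 0) {
    throw new Error("network speed must be positive");
  }
  return (sizeKB / speedKBps) * 1000;
}

const assumedSpeedKBps = 1024; // assumed user network speed: 1 MB/s

console.log(estimateRequestMs(2048, assumedSpeedKBps)); // 2000 (a 2 MB bundle)
console.log(estimateRequestMs(512, assumedSpeedKBps)); // 500 (the same bundle cut to 512 KB)
```

Since the network speed is outside our control, the only term we can shrink is the numerator, which is what the following subsections focus on.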

2.1.2.1 Reduce Build Product Size

This step is generally done in combination with specific front-end engineering tooling. The project I work on uses koi4 (based on umi4) + webpack, so the following is described in terms of the koi4 and umi ecosystem. The principles are the same for other toolchains, but the concrete engineering implementation may differ.

Common Public Dependencies UMD + Externals

- Our commonly used basic dependencies such as react/react-dom/antd/lodash/moment, etc., generally do not change. We load and use them through the UMD method combined with externals, allowing them to be cached locally for a long time. Each application release will not cause these fixed caches to become invalid. This approach can effectively improve the development experience for projects with rich text/chart-type large dependencies.

- The first thing to note about this transformation is that in a micro-frontend architecture, the versions of basic dependencies used by different sub-applications may not be so unified. This transformation brings the most benefit to projects with unified engineering dependency systems. Applications that can't upgrade some dependencies can be left out for now, improving ROI.

- The second thing to note is that third-party dependency code contains many imports such as antd/es/Button. If you only configure a single external for antd, you'll find that antd is still bundled into the build output. You can use webpack plugins or similar methods to externalize all of these es sub-path imports so that no extra copy of antd ends up in the output. Our team has built this feature into koi4. The same applies to lodash and others.

- There's also the module federation approach for code sharing, which won't be elaborated on here.
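As a minimal sketch of the externals part, the object below uses the shape of webpack's `externals` option, mapping each package name to the global variable that its UMD build conventionally exposes. The variable names `externals` and `webpackConfig` are only for illustration, the UMD scripts themselves are assumed to be loaded through script tags before the application bundle, and sub-path imports such as antd/es/Button are not covered by this simple mapping.

```typescript
// Package name -> global variable exposed by the package's UMD build.
const externals: Record<string, string> = {
  react: "React",
  "react-dom": "ReactDOM",
  antd: "antd",
  lodash: "_",
  moment: "moment",
};

// Fragment of a webpack-style configuration. With this in place,
// `import React from "react"` resolves to the global `React` at runtime
// instead of being bundled into the application code.
const webpackConfig = {
  externals,
};

console.log(Object.keys(webpackConfig.externals).join(", "));
```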

Appropriate Code Splitting Strategy (dynamicImport)

- Umi3 has a configuration option called dynamicImport. In umi4, this feature is enabled by default; it splits bundles by page and loads them on demand. Without it, all pages are packed into the same bundle (umi.js), which makes the first screen load very slowly. The most extreme project I've taken over had a umi.js of 6MB+ after compression. After simply changing some configuration (umd + externals + dynamicImport + runtimeImport + tree shaking), the compressed size dropped to 834KB.

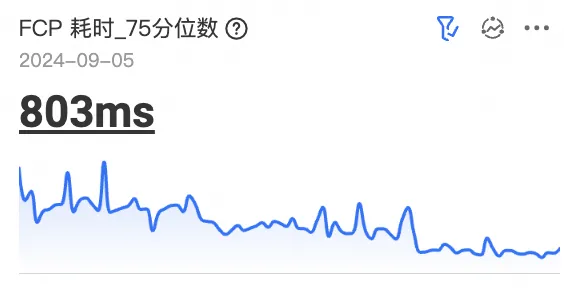

- In the micro-frontend architecture, the size of umi.js affects not only network loading but also how quickly qiankun parses umi.js, and therefore various performance indicators. We recently optimized the size of the umi.js entry file, reducing the total size on the user access path by 350KB. FCP improved from 1000ms to 750ms, and other indicators such as LDP/LCP/MCP, which can only occur after FCP, improved by a similar amount.

Enable Tree Shaking

- Tree shaking is an optimization technique mainly used in the build process of JavaScript applications, especially when using modular systems (such as ES6 modules). Its main function is to eliminate unused code, thereby reducing the final generated file size, improving loading speed and performance.

- Bundlers such as webpack perform tree shaking on ES module code in production builds. Adding "sideEffects": false to package.json additionally tells the bundler that the modules in the package have no side effects, so unused modules can be dropped entirely. The field also accepts a list of files that should be excluded from this treatment. The main concern when enabling it is whether the project code contains "side effects": if it does, that code may be mistakenly removed and cause problems. Regular projects generally don't have many side effects.

- One piece of side-effect code I encountered was in a project I took over: a script was imported and executed purely for its side effects through import 'xxx'. After tree shaking was enabled, the related code was removed, resulting in a white screen on the page.

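```tsx
// edges/index.tsx
// Importing this module registers custom edges and connectors as a side effect.
import { Graph, Path } from '@antv/x6'
import { GraphEdge, GRID_SIZE } from '../../constants'

Graph.registerEdge(xxxx)
Graph.registerConnector(xxx)

// index.tsx
// Side-effect-only import: nothing is bound to a name, so the bundler may drop
// it once the package is marked with "sideEffects": false.
import './components/edges'
```

Packaging and Compression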

- In umi

- cssMinifier, you can choose to use esbuild / cssnano / parcelCSS to compress CSS, default is esbuild.

- jsMinifier, you can use esbuild / terser / swc / uglifyJs to compress JavaScript, default is esbuild.

- HTML compression, different packaging systems also have different plugins to compress HTML, but the benefit of this is relatively low.

- webpack

- You can compress JavaScript code by configuring TerserPlugin.

- rollup

- You can use rollup-plugin-terser (or its maintained successor, @rollup/plugin-terser) to compress JavaScript code.

- Other engineering systems

- Generally, there are corresponding built-in compression plugins.

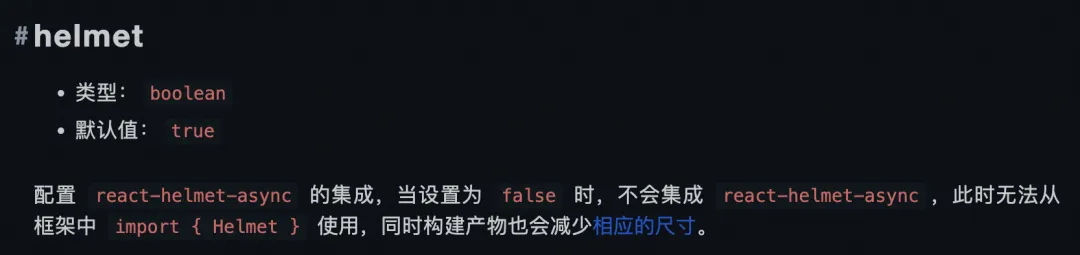

Turn off unnecessary plugins in umi4

- Umi4 enables many unnecessary plugins by default, which can be turned off as needed.

- antd: false, if antd is transformed to load with umd+externals, we generally don't need this, just implement on-demand.

- locale: false, configure as needed.

- helmet: false, if you've never heard of this, just turn it off directly.

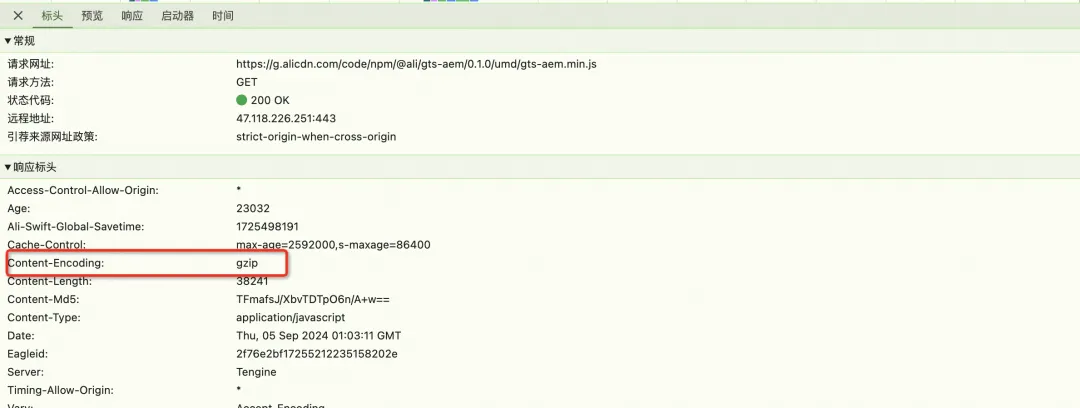

2.1.2.2 Compression During Network Transmission

2.1.2.3 Cache Static Resources and Critical Interfaces

First, let's clarify what we cache: front-end static resources (such as JS, CSS, and images) and interfaces that do not have high real-time requirements but block subsequent rendering in CRP (such as fixed user information or menu data). The caching solutions we commonly deal with include CDN edge node caching, HTTP caching, service worker caching, and the web storage APIs (localStorage/sessionStorage/IndexedDB). We generally don't use the Web Storage APIs to cache static resources because their space is limited.

- CDN Edge Node Caching

- CDN (Content Delivery Network) edge node caching refers to content stored on various edge nodes (also known as cache servers) in the CDN network to improve user access speed and reduce the burden on the source server. Simply put, it's about bringing resources closer to the physical location of end users, caching them at the nearest CDN node to the user.

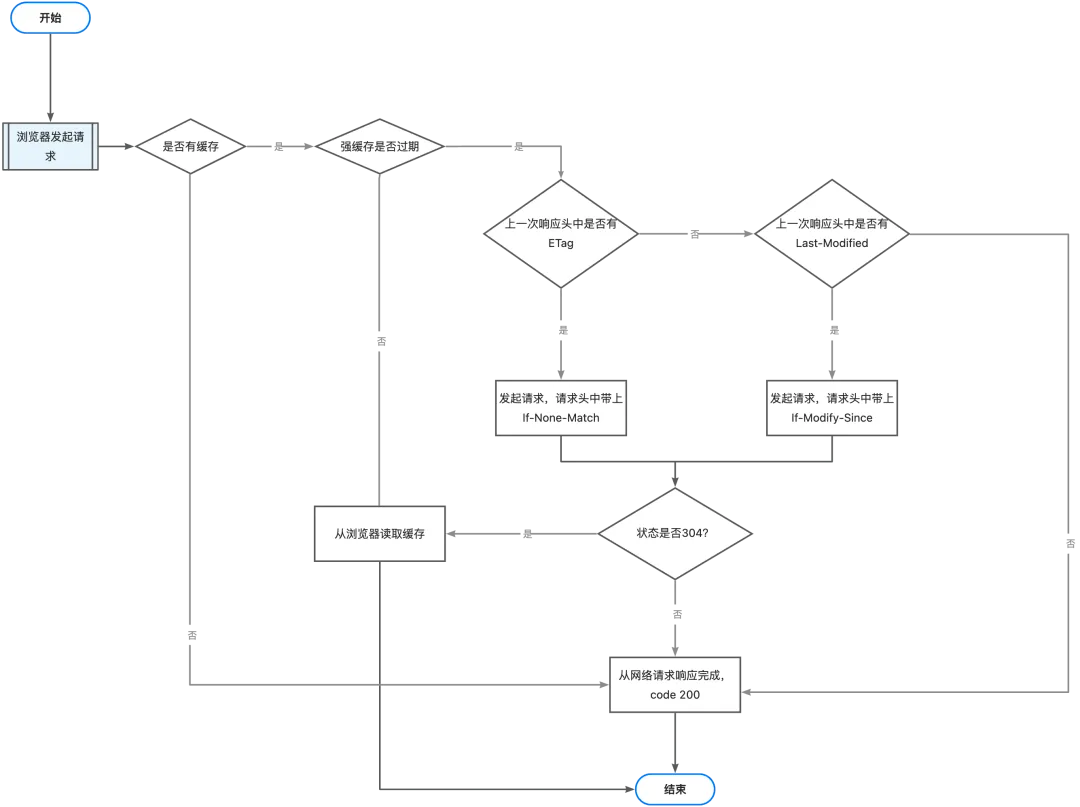

- HTTP Caching

- HTTP caching is divided into strong caching and negotiated caching (revalidation, in which the browser asks the server whether its cached copy is still valid). The HTTP caching process is as follows.

- How to determine if strong caching has expired?

- Expires

- Expires is a header field introduced in HTTP/1.0, used to specify a specific date and time when the response is considered expired. Its value is a string in HTTP date format (absolute time).

- Cache-Control

- Cache-Control is a header field introduced in HTTP/1.1 that can more precisely control caching strategies. It can contain multiple directives, separated by commas.

- public : Indicates that the response can be stored by any cache.

- private : Indicates that the response can only be stored by a single user's cache. For example, the CDN mentioned above cannot cache it.

- no-cache : The response may be stored, but the cache must revalidate it with the server (i.e., send a request again) before each use.

- no-store : Does not allow the cache to store any information about the client request or server response.

- max-age=<seconds> : Specifies the maximum caching time for the response in seconds. After this time, the cache is considered expired.

- must-revalidate : Indicates that the cache must revalidate before using expired data.
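To make the strong-caching decision concrete, here is a simplified sketch of how a cache might interpret these directives. The function names are hypothetical, and the logic covers only no-store, no-cache, and max-age; a real browser cache also handles Expires, heuristic freshness, and many other details.

```typescript
// Parse a Cache-Control header value into a directive map, e.g.
// "public, max-age=3600" -> { "public" => true, "max-age" => "3600" }.
function parseCacheControl(header: string): Map<string, string | true> {
  const directives = new Map<string, string | true>();
  for (const part of header.split(",")) {
    const trimmed = part.trim();
    if (trimmed === "") continue;
    const eq = trimmed.indexOf("=");
    if (eq === -1) {
      directives.set(trimmed.toLowerCase(), true);
    } else {
      const name = trimmed.slice(0, eq).trim().toLowerCase();
      const value = trimmed.slice(eq + 1).trim().replace(/^"|"$/g, "");
      directives.set(name, value);
    }
  }
  return directives;
}

// Decide whether a stored response may be reused without contacting the server
// (a strong-cache hit). `ageSeconds` is how long the response has been stored.
function canUseWithoutRevalidation(cacheControl: string, ageSeconds: number): boolean {
  const directives = parseCacheControl(cacheControl);
  if (directives.has("no-store") || directives.has("no-cache")) return false;
  const maxAge = directives.get("max-age");
  // Simplification: without an explicit max-age, treat the response as stale.
  if (typeof maxAge !== "string") return false;
  const seconds = Number(maxAge);
  return Number.isFinite(seconds) && ageSeconds < seconds;
}

console.log(canUseWithoutRevalidation("public, max-age=3600", 120)); // true
console.log(canUseWithoutRevalidation("public, max-age=3600", 4000)); // false
console.log(canUseWithoutRevalidation("no-cache", 0)); // false
```

When this check fails, the browser falls back to negotiated caching and asks the server whether the stored copy is still valid.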

Service Worker Caching

- Service workers are the core technology of PWA, serving as proxies between web browsers and web servers. They aim to improve reliability by providing offline access functionality while enhancing web page performance.

- Service workers are mainly targeted at weak network or offline scenarios. In our web performance optimization scenarios, especially in middle and back-end scenarios, they don't seem to have much advantage over HTTP caching.

- After research, we plan to use service workers with the Stale While Revalidate caching strategy to cache the HTML of the first visit and interfaces that don't require high real-time performance but block subsequent processes in CRP. The reasons are as follows.

- We usually inject a lot of additional interface query data into the first-screen HTML, such as user information, resulting in 150-300ms access time for the first-screen HTML. After this 150-300ms, subsequent request and rendering processes will start. This caching strategy can improve the overall site access speed by 150-300ms (depending on the HTML request time).

- The same applies to interfaces that don't require high real-time performance but block subsequent processes in CRP. Why not cache these interfaces with localStorage or similar? Our navigation and loader, for example, are introduced using the UMD approach, and transforming each one individually would be costly. With service workers, we can intercept requests globally, similar to filters and interceptors.

- Why adopt the Stale While Revalidate caching strategy? If we hard-cached the HTML for, say, 10 minutes and one of our releases contained a bug, users could keep receiving the broken version until the cache expired.
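The Stale While Revalidate idea can be sketched independently of the service worker APIs. The version below uses an in-memory Map and an injected fetcher, both of which are assumptions made to keep the example self-contained; in an actual service worker the same logic would typically live in a fetch event handler and use the Cache API instead.

```typescript
type Fetcher<T> = (key: string) => Promise<T>;

// Returns a getter that serves the cached value immediately when one exists
// and refreshes the cache in the background for the next caller.
function createStaleWhileRevalidate<T>(fetcher: Fetcher<T>): (key: string) => Promise<T> {
  const cache = new Map<string, T>();

  // Fetch a fresh copy and store it.
  function refresh(key: string): Promise<T> {
    return fetcher(key).then((value) => {
      cache.set(key, value);
      return value;
    });
  }

  return function get(key: string): Promise<T> {
    if (cache.has(key)) {
      const cached = cache.get(key) as T;
      // Revalidate in the background; on failure keep serving the stale copy.
      refresh(key).catch(() => undefined);
      return Promise.resolve(cached);
    }
    // Nothing cached yet: the first visit has to wait for the network.
    return refresh(key);
  };
}
```

The trade-off is that a visitor may see content that is one release behind, but only once: the background refresh means the next visit already gets the new version, which is why a bad release does not stay pinned for a fixed period.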

Web Storage API

The Web Storage API provides several mechanisms for storing data on the client side, allowing web applications to persist data like desktop applications. We generally use the Web Storage API to cache some data interfaces with low real-time requirements (such as dictionaries) to improve page performance.

localStorage

- Data stored using localStorage is persistent, meaning it will continue to exist unless explicitly deleted by the user or script. Storage space is typically 5MB or more.

sessionStorage

- Unlike localStorage, data in sessionStorage only exists during a browser session. When the browser window is closed, the stored data is cleared. The storage capacity of sessionStorage is often the same as localStorage, but may be less in some implementations.

indexedDB

- IndexedDB is a client-side NoSQL database technology that allows web applications to store large amounts of structured data in the user's browser. Compared to localStorage and sessionStorage, IndexedDB provides more powerful data storage capabilities, supporting transaction processing, indexing, and complex query capabilities.

- Generally, the storage space of indexedDB is 250MB or more, and can be further expanded if the user agrees.
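For caching low-real-time data such as dictionaries, a thin wrapper with an expiry time is usually enough. The following is a minimal sketch: the `StorageLike` interface and the helper names are hypothetical, and the storage object is passed in so that `localStorage` or `sessionStorage` can be used in the browser while a simple stand-in can be used elsewhere.

```typescript
// Minimal subset of the Web Storage interface; localStorage and
// sessionStorage both satisfy it.
interface StorageLike {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

interface CachedEntry<T> {
  value: T;
  expiresAt: number; // epoch milliseconds
}

// Store a JSON-serializable value together with its expiry time.
function setWithTtl<T>(storage: StorageLike, key: string, value: T, ttlMs: number, now: number = Date.now()): void {
  const entry: CachedEntry<T> = { value, expiresAt: now + ttlMs };
  storage.setItem(key, JSON.stringify(entry));
}

// Read a value back; expired or corrupted entries are removed and reported as a miss.
function getWithTtl<T>(storage: StorageLike, key: string, now: number = Date.now()): T | undefined {
  const raw = storage.getItem(key);
  if (raw === null) return undefined;
  try {
    const entry = JSON.parse(raw) as CachedEntry<T>;
    if (typeof entry.expiresAt !== "number" || now >= entry.expiresAt) {
      storage.removeItem(key);
      return undefined;
    }
    return entry.value;
  } catch {
    storage.removeItem(key);
    return undefined;
  }
}
```

In a page this might be used as `getWithTtl(localStorage, "dict")`, falling back to the real interface request on a miss and then calling `setWithTtl` with the response.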

2.1.3 Optimize Resource Request Order

2.1.3.1 Load Critical Resources as Early as Possible

dns-prefetch, DNS Prefetching

- DNS prefetching allows the browser to start DNS queries early in page loading. This is especially useful for cross-origin resources as it can reduce the delay caused by DNS resolution. Usage is as follows:

```html
<link rel="dns-prefetch" href="https://example.com" />
```

preconnect, Domain Pre-connection

- Pre-connection allows the browser to establish connections with third-party resource servers as early as possible. This includes DNS queries, TCP handshakes, and TLS negotiation. Through pre-connection, the browser can prepare network connections before actually requesting resources, thus accelerating resource download. Usage is as follows:

```html
<link rel="preconnect" href="https://fonts.gstatic.com" />
```

- If the resources will be fetched in CORS mode (fonts, for example), add the crossorigin attribute so that the preconnected connection can actually be reused:

```html
<link rel="preconnect" href="https://fonts.gstatic.com" crossorigin />
```

- preload, Increase Resource Loading Priority

- Preloading allows developers to inform the browser to load certain resources immediately, even if these resources are not immediately needed. This is typically used for resources critical to user experience, such as key CSS or JS files. Through preloading, the browser can load these resources before the user actually needs them, thus reducing delay. The as attribute specifies the type of resource to be preloaded. Usage is as follows:

```html
<link rel="preload" href="/style.css" as="style" />
<link rel="preload" href="/script.js" as="script" />
```

- prefetch, Preload Resources Used on Other Pages

- Prefetching allows the browser to download resources that may be needed in the future during idle time. This is typically used for resources of the next page that users might visit, such as linked pages. Prefetching is a technique that starts downloading resources before the user has requested them, which helps reduce delay for future requests. Usage is as follows:

```html
<link rel="prefetch" href="/next-page.html" />
<link rel="prefetch" href="/image.jpg" as="image" />
```

- Note that overuse of preload and prefetch may lead to unnecessary bandwidth consumption, so careful selection of which resources to preload or prefetch is necessary. The same applies to preconnect and dns-prefetch; use them reasonably as needed.

2.1.3.2 Delay Loading & Execution of Non-Critical Resources

async

- For classic scripts, if the async attribute is present, the script is fetched in parallel with HTML parsing and executed as soon as it is available.

- For module scripts, if the async attribute is present, the script and all its dependencies are fetched in parallel with HTML parsing and executed as soon as they are available.

defer

- The defer attribute tells the browser to download the script in parallel with HTML parsing and to execute it after the document has been parsed, but before the DOMContentLoaded event fires. This ensures that the script does not block HTML parsing and that all elements are available in the DOM when it runs. Because DOMContentLoaded waits for deferred scripts to finish, a slow deferred script delays that event.

Some notes about async and defer

- Both attributes keep the script download from blocking HTML parsing, but the request itself is still issued in the normal order in which the browser discovers the script tags.

- Execution is just delayed, but it will still execute on the main thread.

- After setting async, its execution order is uncertain; if there are requirements for execution order, defer can be used.

On-demand Loading

- For common dependencies, our team developed a umi plugin -- umi-plugin-runtime-import[1] (umi-plugin-runtime-import-v4[2], for umi4). For example, if we implement the above UMD + externals loading scheme transformation, a large amount of UMD pre-loading will make the first screen loading unbearable. If using the async scheme, there might be situations where the page code has executed but the UMD hasn't finished executing, leading to a white screen. The runtimeImport plugin can implement on-demand import for these UMDs, only loading when the dependency corresponding to this UMD is used in the page. This solves the first screen loading problem brought by the UMD + externals transformation.

- For page-level code, umi comes with dynamicImport to implement package splitting by page and on-demand loading mechanism.

- Although these two schemes are umi's plugin mechanism, they both use webpack-related capabilities at the bottom layer. Other engineering systems can also refer to implement their own schemes.
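The core idea behind on-demand loading, loading a dependency only when it is first used and sharing that single load among all callers, can be sketched as follows. This is not the implementation of the plugins mentioned above; `lazyOnce` is a hypothetical helper built on plain promises.

```typescript
// Wrap a loader so that the underlying resource is requested only on first
// use, and concurrent or later callers share the same promise.
function lazyOnce<T>(load: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending === undefined) {
      pending = load().catch((error: unknown) => {
        pending = undefined; // allow a retry after a failed load
        throw error;
      });
    }
    return pending;
  };
}

// Usage sketch (the module name is a placeholder):
//   const loadEditor = lazyOnce(() => import("some-rich-text-editor"));
//   button.onclick = async () => { const editor = await loadEditor(); /* ... */ };
```

With a bundler such as webpack, a dynamic `import()` inside the loader also acts as a split point, so the dependency ends up in its own chunk and is only downloaded when the wrapped function is first called.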

2.2 Page Rendering Phase

2.2.0 Optimization Approach

The optimization of the resource request phase is to make the resources for page rendering ready as quickly as possible, and then perform page rendering. In the page rendering phase, let's review the page rendering CRP.

- The browser parses the HTML, builds the DOM tree based on HTML and JS scripts, builds the CSSOM tree based on CSS, and synthesizes the render tree;

- Calculate node layout information combining the render tree and screen resolution and other related information;

- The browser renders the page based on the render tree and layout information;

- Finally, different layers are merged into the final image, appearing on the page;

First, we definitely can't do without SSR, the time-honored optimization scheme. Secondly, we currently focus on several performance impact factors in common web scenarios in CRP: JS code, CSS code, HTML code, image loading, and animations. The focus may be different in different business scenarios, and others such as WebGL, video encoding and decoding, and document editors will not be expanded upon.

2.2.1 Server-Side Rendering (SSR)

- SSR (Server-Side Rendering) is a web page rendering technology that generates complete HTML pages on the server and then sends them to the client (browser). Compared to client-side rendering (CSR), with SSR, when a user requests a page, the server handles all rendering logic and returns the generated page directly to the user.

- Advantages: Faster first screen loading time; SEO friendly; Better performance, reducing some client-side burden.

- Disadvantages: Requires server resources, and the server load is heavy in high-traffic scenarios, so SSR may be hard to promote in teams outside consumer-facing (C-end) scenarios; Development is more complex, especially when handling data fetching and state management; Interaction is delayed, because an SSR page still needs additional JS to load before it becomes interactive.

- Summary: Actual use still needs to consider scenarios and ROI. Relative to the benefits brought by SSR, whether the cost of enabling SSR is acceptable.

2.2.2 JS Code Performance Optimization

2.2.2.1 DOM Optimization

Reduce DOM Operations

- DOM operations are often performance bottlenecks because modifications to the DOM can cause the browser to recalculate styles and layout and to repaint. Reducing DOM operations can significantly improve performance.

Batch DOM Changes

- Batch updating refers to combining multiple DOM operations into one operation to reduce the number of reflows and repaints. This can usually be achieved using DocumentFragment and innerHTML approaches.
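A minimal sketch of the innerHTML approach: build the markup for all items as one string and assign it once, instead of appending nodes one by one. The `HtmlContainer` interface and function names are illustrative assumptions; any real DOM element has a writable `innerHTML` and fits this shape.

```typescript
// Escape text so that it is safe to embed in an HTML string.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Anything with a writable innerHTML works here, including a real HTMLElement.
interface HtmlContainer {
  innerHTML: string;
}

// Render all items with a single DOM write rather than one write per item.
function renderList(container: HtmlContainer, items: string[]): void {
  const html = items.map((item) => `<li>${escapeHtml(item)}</li>`).join("");
  container.innerHTML = html;
}

// Usage sketch in a page: renderList(document.querySelector("ul")!, names);
```

The DocumentFragment approach follows the same principle: nodes are assembled off-document and attached in one operation, so layout work is triggered once instead of once per node.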

Simplify HTML Code

- Simplifying HTML code refers to reducing unnecessary DOM nodes and complex structures to improve performance. This includes reducing nesting and removing unnecessary elements.

Use Virtual DOM

- In modern front-end frameworks, we actually rarely directly operate on the DOM, but instead use front-end frameworks like React and Vue that adopt virtual DOM technology. Virtual DOM maintains a virtual DOM tree in memory, reducing direct operations on the real DOM, thereby improving performance and response speed.

- Due to the characteristics of virtual DOM itself (new and old tree Diff), it will cause some performance issues in the following scenarios: frequent state updates, overly large component trees, etc. However, there are mature solutions to these problems now.

Event Delegation

- Event delegation is an efficient event handling technique, mainly used to reduce the number of event handlers, improve performance, and simplify code. Its basic principle is to add event handlers to parent elements instead of each child element.

- For example, if a card contains 100 numbers rendered in a loop, and we want to listen for clicks on these numbers. One way is to add a listener to each number, which would require adding 100 listeners. Another way is to directly add one listener to the card, and in combination with the event bubbling mechanism, determine which number was clicked through event.target, and then execute the corresponding operation.
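The card example could be sketched like this. The helper name and the `data-value` attribute are assumptions for illustration; the handler only relies on `closest` and `getAttribute`, which DOM elements provide.

```typescript
// The subset of the DOM Element API that the handler relies on.
interface ClosestCapable {
  closest(selector: string): ClosestCapable | null;
  getAttribute(name: string): string | null;
}

function isClosestCapable(value: unknown): value is ClosestCapable {
  return (
    typeof value === "object" &&
    value !== null &&
    typeof (value as { closest?: unknown }).closest === "function" &&
    typeof (value as { getAttribute?: unknown }).getAttribute === "function"
  );
}

// Create ONE handler for the parent element. It uses event.target to find
// which child matching `selector` was clicked and reports its data-value.
function createDelegatedHandler(selector: string, onMatch: (value: string) => void) {
  return (event: { target: unknown }): void => {
    const target = event.target;
    if (!isClosestCapable(target)) return;
    const match = target.closest(selector);
    if (match === null) return; // the click landed outside any number
    const value = match.getAttribute("data-value");
    if (value !== null) onMatch(value);
  };
}

// Usage sketch in a page, assuming each number is rendered as
// <span data-value="7">7</span> inside the card element:
//   card.addEventListener("click", createDelegatedHandler("[data-value]", (v) => console.log(v)));
```

Using `closest` rather than comparing `event.target` directly keeps the handler working when a number contains nested elements, since the click may land on a descendant.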

Timely Removal of Unnecessary Event Listeners

- Remove event listeners as soon as they are no longer needed, to reduce memory usage and avoid unexpected behavior.

2.2.2.2 Function Task Optimization

Web Worker

- Web Worker is a mechanism for running JavaScript in browsers that allows developers to execute scripts in background threads, thereby achieving multi-threaded processing. Its main purpose is to improve web page performance, especially when handling large computations or I/O operations, avoiding blocking the main thread (UI thread). For details, refer to MDN Web Workers API[3].

WebGPU

- WebGPU is a new Web API designed to provide high-performance graphics and computing capabilities for web pages. It allows developers to utilize GPU (Graphics Processing Unit) for parallel computing, thereby accelerating graphics rendering and data processing. For details, refer to MDN WebGPU API[4].

Long Task Handling

- In JavaScript, long tasks are operations that occupy the main thread for a relatively long time (the browser's Long Tasks API uses a threshold of 50 ms), such as complex calculations, large data processing, or handling large network responses. While a long task is running on the main thread, the user interface freezes, which hurts user experience.

- Common handling strategies for long tasks are as follows:

- Break long tasks into multiple small tasks and use setTimeout or setInterval to execute them in batches. This allows the browser to have a chance to handle other events (such as user input, rendering, etc.).

- Move long tasks to Web Workers for execution. Web Workers run in background threads and won't block the main thread, suitable for handling complex calculations or large amounts of data.

- For long tasks that need to frequently update the UI, you can use requestAnimationFrame to decompose the task. This allows the task to be executed within the browser's repaint cycle, ensuring smooth animation effects.
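The first strategy, splitting a long task into small batches with setTimeout, might look like the following sketch. The function name and the chunk size are illustrative assumptions.

```typescript
// Process `items` in chunks of `chunkSize`, yielding to the event loop between
// chunks so that input handling and rendering can run in between.
function processInChunks<T>(
  items: readonly T[],
  chunkSize: number,
  handle: (item: T) => void,
): Promise<void> {
  if (chunkSize <= 0) {
    return Promise.reject(new Error("chunkSize must be positive"));
  }
  return new Promise<void>((resolve, reject) => {
    let index = 0;
    const runChunk = (): void => {
      try {
        const end = Math.min(index + chunkSize, items.length);
        for (; index < end; index++) {
          handle(items[index]);
        }
      } catch (error) {
        reject(error);
        return;
      }
      if (index < items.length) {
        setTimeout(runChunk, 0); // schedule the next chunk as a separate task
      } else {
        resolve();
      }
    };
    runChunk(); // the first chunk runs immediately
  });
}

// Usage sketch: await processInChunks(rows, 500, (row) => index.add(row));
```

The chunk size is a tuning knob: smaller chunks keep the page more responsive but add scheduling overhead, so it is worth measuring with the real workload.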

Throttling

- Throttling limits a function to at most one execution per time interval. It is typically used for high-frequency events: no matter how often the event fires, the target function runs at most once within each interval.

- Common usage scenarios: scroll event handling, window resize events (used in combination with debouncing), timed data updates (such as API requests), etc.
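A minimal leading-edge throttle is sketched below. The optional `now` parameter is an assumption added so that the clock can be replaced in tests; utility libraries offer more complete versions with trailing calls and cancellation.

```typescript
// Returns a wrapper that invokes `fn` at most once per `intervalMs`.
// Calls arriving inside the interval are dropped (leading-edge throttle).
function throttle<A extends unknown[]>(
  fn: (...args: A) => void,
  intervalMs: number,
  now: () => number = Date.now,
): (...args: A) => void {
  let lastRun = -Infinity;
  return (...args: A): void => {
    const current = now();
    if (current - lastRun >= intervalMs) {
      lastRun = current;
      fn(...args);
    }
  };
}

// Usage sketch: window.addEventListener("scroll", throttle(updateProgressBar, 100));
```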

Debouncing

- Debouncing is a technique that postpones a function call until a specified quiet period has passed since the last trigger, so a burst of triggers results in a single call. It is typically used to handle user input events, ensuring that an operation is executed only after the user stops typing.

- Common usage scenarios: real-time search suggestions for input boxes, window resize events, preventing repeated clicks on form submission buttons, etc.
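A minimal sketch of a trailing-edge debounce: every new call cancels the pending one, so only the last call in a burst runs. The function name and signature are illustrative, not taken from a specific library.

```typescript
// Returns a wrapper that runs `fn` only after `waitMs` without new calls.
function debounce<A extends unknown[]>(
  fn: (...args: A) => void,
  waitMs: number,
): (...args: A) => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: A) => {
    // A new call restarts the quiet period.
    if (timer !== undefined) {
      clearTimeout(timer);
    }
    timer = setTimeout(() => {
      timer = undefined;
      fn(...args);
    }, waitMs);
  };
}
```

For a search box, the wrapped function would typically be the one that requests suggestions, so that typing "react" quickly sends one request instead of five.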

2.2.2.3 React Specifics

React 18 introduced two hooks, useTransition and useDeferredValue, for managing rendering performance, especially in concurrent mode.

Alongside React 19, the React team plans to introduce "automatic memoization", that is: no need to manually write useMemo, useCallback, or memo for performance optimization. This is provided by a brand new compiler (React Compiler), which analyzes components at build time and memoizes them automatically so that unchanged parts are not re-rendered.

hooks

useCallback / useMemo

- useCallback / useMemo are both Hooks designed to optimize performance. They can help us avoid unnecessary calculations or function creations when components re-render.

- Generally, useMemo is used to cache values, and useCallback is used to cache functions. No need to elaborate further. For details, please refer to the documentation: useMemo[5], useCallback[6].

useTransition (React 18)

- Lets developers mark some state updates as non-urgent, so that urgent updates such as user input can interrupt them. This avoids UI stuttering caused by large updates and improves application responsiveness.

useDeferredValue (React 18)

- Similar to useTransition, but it defers a value rather than wrapping a state update: the component first re-renders with the old value, and the re-render with the new value happens afterwards at lower priority. This is typically used when one part of the UI must respond immediately, such as a search input, while an expensive part that depends on the same value, such as a long list, is allowed to lag behind.

memo

- memo is a Higher-Order Component (HOC) used to optimize the performance of pure function components. Its main function is to avoid unnecessary re-renders when the component's props haven't changed, thereby improving application performance.
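By default, memo compares the previous and next props shallowly, one prop at a time, using Object.is. The sketch below is not React's source code; `shallowEqualProps` is a hypothetical function that mimics that comparison in plain TypeScript to show why an inline function prop defeats memo and why useCallback helps.

```typescript
type Props = Record<string, unknown>;

// Hypothetical re-implementation of a shallow props comparison.
function shallowEqualProps(prev: Props, next: Props): boolean {
  const prevKeys = Object.keys(prev);
  const nextKeys = Object.keys(next);
  if (prevKeys.length !== nextKeys.length) {
    return false;
  }
  return prevKeys.every(
    (key) =>
      Object.prototype.hasOwnProperty.call(next, key) &&
      Object.is(prev[key], next[key]),
  );
}

// A stable function reference (what useCallback provides) compares equal.
const stableHandler = (): void => {};
const sameReference = shallowEqualProps(
  { label: "Save", onClick: stableHandler },
  { label: "Save", onClick: stableHandler },
); // true

// A function created on every render is a new reference each time.
const newReference = shallowEqualProps(
  { label: "Save", onClick: (): void => {} },
  { label: "Save", onClick: (): void => {} },
); // false
```

When the comparison returns false, a memoized component re-renders even though nothing visible changed, which is the case useMemo and useCallback are meant to prevent.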

Fragment

- Fragment allows users to group lists of child elements without adding extra DOM nodes to the final rendered HTML structure.

- It can be used to simplify DOM structure and reduce DOM nesting.

Optimize List Rendering (Key)

- React can use key to optimize list rendering. The main function of key is to help React identify which elements have been added, removed, or changed, enabling React to update the DOM more efficiently. Proper use of the key attribute can improve performance and reduce memory consumption when React renders large lists.

- Additionally, Key can also be used in non-list scenarios, such as dynamic components, conditional rendering, HOCs, etc.

Lazy Loading

- React lazy loading is an optimization technique used to load components on-demand, rather than loading all components at the beginning. This method can significantly improve application startup speed and performance, especially for large applications or applications with many components.

- In React, lazy loading is generally implemented using a combination of lazy and Suspense. For component files loaded through lazy, the bundler automatically splits them into separate chunks and loads them on demand.
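The core idea behind lazy, loading a module only on first use and reusing the result afterwards, can be sketched without React. This is an illustration of the idea, not React's implementation; `lazyOnce`, `loadReport`, and the module path in the comment are hypothetical.

```typescript
// Wraps a loader so it runs on first use only; later calls reuse the promise.
function lazyOnce<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending === undefined) {
      pending = loader();
    }
    return pending;
  };
}

// Stand-in for `() => import("./HeavyReport")`, a hypothetical module path.
// With a real dynamic import, the bundler would emit a separate chunk.
let loadCount = 0;
const loadReport = lazyOnce(async () => {
  loadCount += 1;
  return { render: (): string => "report" };
});
```

Nothing is loaded until `loadReport()` is called for the first time, which is what keeps the module out of the initial bundle.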

Virtual Scrolling

- Virtual scrolling is an optimization technique used to efficiently render lists with large amounts of data. When there are many elements in a list, if all elements are rendered at once, it will cause performance issues, especially on mobile devices. Virtual scrolling solves this problem by only rendering elements currently in the viewport, greatly reducing memory usage and improving rendering performance.

- Virtual scrolling is a general technical solution, not limited to React. For applicable scenarios, it can usually be integrated directly through third-party packages. I usually use ahooks' useVirtualList, and most related components in antd also support the virtual option, which can be used directly.
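The calculation at the heart of virtual scrolling can be sketched as below, assuming every item has the same fixed height. The names (`getVisibleRange`, `overscan`) are chosen for this example and are not the API of ahooks or antd.

```typescript
interface VisibleRange {
  start: number; // index of the first item to render (inclusive)
  end: number; // index after the last item to render (exclusive)
  offsetTop: number; // vertical offset of the first rendered item, in px
}

// Computes which items intersect the viewport, plus a few extra on each side.
function getVisibleRange(
  scrollTop: number,
  viewportHeight: number,
  itemHeight: number,
  itemCount: number,
  overscan = 3, // extra items rendered above and below (assumed default)
): VisibleRange {
  const firstVisible = Math.floor(scrollTop / itemHeight);
  const lastVisibleExclusive = Math.ceil((scrollTop + viewportHeight) / itemHeight);
  const start = Math.max(0, firstVisible - overscan);
  const end = Math.min(itemCount, lastVisibleExclusive + overscan);
  return { start, end, offsetTop: start * itemHeight };
}
```

For a list of 10,000 rows, only the handful of rows in `[start, end)` are mounted; a spacer of height `itemCount * itemHeight` keeps the scrollbar proportions correct.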

Use Appropriate State Management

- Appropriate state management solutions can help us better control the timing and scope of state updates, thereby reducing unnecessary re-renders.

- For example, using state management libraries like Redux or MobX, we can more finely control which components need to re-render. This is especially true for large or complex React applications.

SWR

- SWR is a JavaScript library for optimizing data fetching in React applications, developed and maintained by Vercel (formerly Zeit). The name SWR comes from its main feature: "Stale While Revalidate", which is a caching strategy aimed at improving user experience and reducing latency.

- useRequest[7] in ahooks also supports SWR, but judging from its code[8], the cache lives in memory. Cached data is therefore lost when the page is refreshed, so it cannot return data as quickly as possible when the user closes the browser and visits again. To solve this problem, we implemented our own version and cached the data in IndexedDB.
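The stale-while-revalidate strategy with a pluggable cache can be sketched as follows. This is not the author's implementation and not the SWR library's API: `CacheStore`, `MemoryStore`, and `staleWhileRevalidate` are hypothetical names, and the in-memory store stands in for a persistent one such as IndexedDB, which could implement the same interface.

```typescript
// Asynchronous store interface, so a persistent backend can be swapped in.
interface CacheStore<T> {
  get(key: string): Promise<T | undefined>;
  set(key: string, value: T): Promise<void>;
}

// In-memory stand-in used here only to keep the example self-contained.
class MemoryStore<T> implements CacheStore<T> {
  private data = new Map<string, T>();
  async get(key: string): Promise<T | undefined> {
    return this.data.get(key);
  }
  async set(key: string, value: T): Promise<void> {
    this.data.set(key, value);
  }
}

// Returns cached data immediately when available and refreshes it in the
// background; `onUpdate` receives the fresh value once it arrives.
async function staleWhileRevalidate<T>(
  key: string,
  fetcher: () => Promise<T>,
  store: CacheStore<T>,
  onUpdate: (fresh: T) => void,
): Promise<T> {
  const cached = await store.get(key);
  const revalidation = fetcher().then(async (fresh) => {
    await store.set(key, fresh);
    return fresh;
  });
  if (cached === undefined) {
    return revalidation; // nothing cached yet: wait for the network
  }
  // Serve stale data now; keep it if the background refresh fails.
  revalidation.then(onUpdate).catch(() => {});
  return cached;
}
```

Because the store interface is asynchronous, replacing `MemoryStore` with a persistent store changes where the data survives, not how the calling code looks.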

qiankun Related

prefetch

- Core sub-applications can be prefetched. One of our pages has extremely high PV, but it is not the site homepage, and its code does not even live in the same application as the homepage. The sub-application that hosts this page was integrated midway for business reasons, is also referenced elsewhere, and is still being actively iterated, so it cannot simply be moved into our main application to skip the intermediate loading step.

- In this scenario, I tried to prefetch the sub-application where this page is located. Through comparison of AEM performance data, there was some benefit.

Optimize entry js file size

- There are two reasons to optimize the entry js size. First, as mentioned above, it makes resource requests faster. Second, when loading a sub-application, qiankun parses the entry js, and this parsing is also time-consuming. Generally, the larger the entry js, the longer parsing takes, and the sub-application goes through parsing again every time it is re-created. Resource requests can be optimized through caching, but the parsing time is currently hard for us to optimize (two links about how qiankun optimizes entry js parsing time are attached below, if you're interested), so reducing the entry js size also brings some benefit.

- [RFC] qiankun's ultimate performance optimization idea, related to import-html-entry.[9]

- Changed 3 characters, 10 times sandbox performance improvement?!![10]

Regarding optimizing entry js size, I've had two recent practices.

- The first was integrating a sub-application that I had temporarily taken over. Due to its history and long-term neglect, this sub-application's umi.js was as large as 6MB+ (after gzip compression). I embedded some of its pages in a drawer, and the first time a user opened the drawer, they had to wait through 5-6 s of spinner time (the time qiankun spent parsing the sub-application's umi.js). I did two rounds of optimization: the first reduced umi.js from 6MB+ to 1.8MB, shortening the spinner time to 2-3 s; the second reduced umi.js from 1.8MB to 834KB, and the spinner time is now less than 1 s.

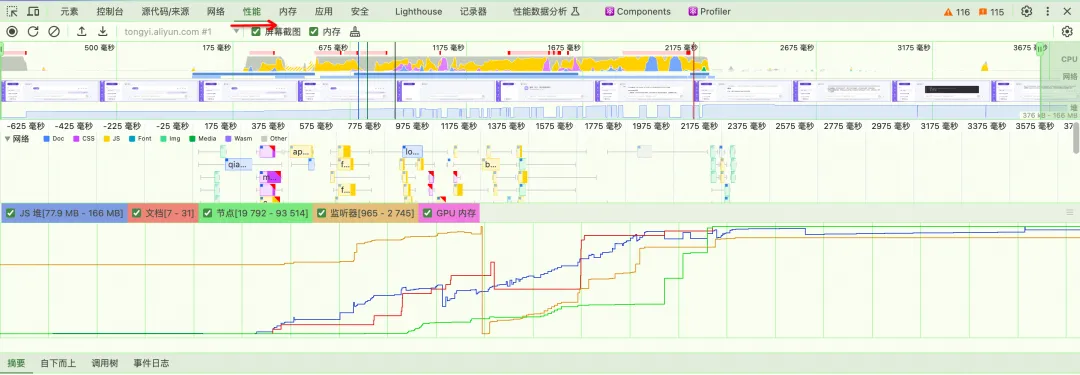

- The second was a series of optimizations for the site I'm responsible for, reducing all sub-applications' umi.js sizes by 120KB - 200KB, and the site's FCP decreased by 200ms+. See 2.1.2.1 Reduce Build Product Size - FCP 75th Percentile Chart for details.

2.2.3 CSS Code Performance Optimization

CSS Modularization

- Through mature community CSS Modules solutions, CSS can be modularized to achieve on-demand loading effects, only loading required content.

CSS Sprite

- CSS Sprite[11] is a method often mentioned in interviews, but I haven't practiced it yet. Sprites put multiple small images (such as icons) we want to use on the site into a single image file, then use different background-position[12] values to display parts of the image in different positions. This can greatly reduce the number of HTTP requests required to fetch images.

- However, in HTTP/2 and HTTP/3, due to the introduction of new features such as multiplexing, the advantages of sprites are not as obvious as in the HTTP/1.x era.

CSS Containment

- A method to implement on-demand rendering using CSS. CSS Containment can instruct the browser to isolate different parts of the page and optimize their rendering independently. This can improve performance when rendering various parts. For example, you can specify that the browser should not render certain containers before they are visible in the viewport. Specific uses include: contain, content-visibility.

- contain allows developers to precisely specify the Containment type to be applied to various containers on the page. This allows the browser to recalculate layout, style, paint, size, or any combination of them for part of the DOM.

- content-visibility allows developers to apply a set of powerful limitations to a group of containers and specify that the browser should not layout and render these containers before needed.

Optimize CSS Writing

- Delete unnecessary styles. All styles are parsed, whether or not they are used in layout and painting, so deleting unused styles can speed up webpage rendering. Currently, there seems to be no low-cost solution for removing unused CSS from build output, so we need to develop good habits when coding.

- Don't apply styles to elements that don't need them. For example, applying styles to all elements with a universal selector will negatively impact performance, especially on larger sites.

- Simplify selectors. Redundant selectors not only increase file size but also increase parsing time.

2.2.4 HTML Code Performance Optimization

- Responsive Handling of Alternative Elements

- Using CSS media query techniques to provide different sized images based on device size. This improves performance. For example, mobile devices only need to download images suitable for their screens, without downloading larger desktop images.

- To provide different resolution versions of the same image based on device resolution and viewport size, we can use the srcset and sizes attributes. srcset provides the intrinsic dimensions of the source images and their filenames, while sizes provides media queries and the image slot width to be filled in each case. Then, the browser decides which images to load based on each slot.

```html
<img srcset="480w.jpg 480w, 800w.jpg 800w" sizes="(max-width: 600px) 480px, 800px" src="800w.jpg" />
```
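How a candidate might be chosen from srcset can be sketched as below. This is a deliberate simplification: the actual choice is left to each browser, which may also consider factors such as cached images, and parsing of the sizes attribute is skipped here (the slot width is passed in directly). `pickCandidate` and its parameters are hypothetical names.

```typescript
interface Candidate {
  url: string;
  width: number; // intrinsic width from the `w` descriptor
}

// Picks the smallest image that still covers the slot at the given
// device pixel ratio, falling back to the largest one available.
function pickCandidate(
  candidates: Candidate[],
  slotWidthCssPx: number,
  devicePixelRatio: number,
): Candidate {
  if (candidates.length === 0) {
    throw new Error("at least one candidate is required");
  }
  const neededWidth = slotWidthCssPx * devicePixelRatio;
  const sorted = [...candidates].sort((a, b) => a.width - b.width);
  return sorted.find((c) => c.width >= neededWidth) ?? sorted[sorted.length - 1];
}

const sources: Candidate[] = [
  { url: "480w.jpg", width: 480 },
  { url: "800w.jpg", width: 800 },
];
```

With these two sources, a 480px slot on a standard-density screen would get 480w.jpg, while the same slot on a 2x screen needs 960 device pixels and falls back to 800w.jpg.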

- Provide Different Sources for Images and Videos

- picture is based on img and allows us to provide multiple different sources for different screen sizes. For example, if the layout is wide, we might want a wide image; if it's narrow, we'd want a narrower image that's still effective in that context. The media attribute of source contains media queries. If the media query returns true, the image referenced by the source's srcset attribute is loaded.

```html
<picture>
  <source media="(max-width: 799px)" srcset="narrow-banner-480w.jpg" />
  <source media="(min-width: 800px)" srcset="wide-banner-800w.jpg" />
  <img src="large-banner-800w.jpg" alt="Dense forest scene" />
</picture>
```

- video is similar to picture, but there are some differences in usage. In video, source elements use src instead of srcset; additionally, there's a type attribute to specify the video format, and the browser loads the first source whose format it supports and whose media query, if present, matches.

```html
<video controls>
  <source src="video/smaller.mp4" type="video/mp4" />
  <source src="video/smaller.webm" type="video/webm" />
  <source src="video/larger.mp4" type="video/mp4" media="(min-width: 800px)" />
  <source src="video/larger.webm" type="video/webm" media="(min-width: 800px)" />
  <!-- fallback for browsers that don't support video element -->
  <a href="video/larger.mp4">download video</a>
</video>
```

- iframe Lazy Loading

- By setting the loading="lazy" attribute on iframes, we can instruct the browser to lazy load iframe content that's initially off-screen.

2.2.5 Image Loading Performance Optimization

Appropriate Scaling, Cropping, and Compression

- When we receive images from designers, if the display requirements aren't too high, we can use relevant platforms or tools to process the images (such as scaling, cropping, compression, etc.) to reduce image size.

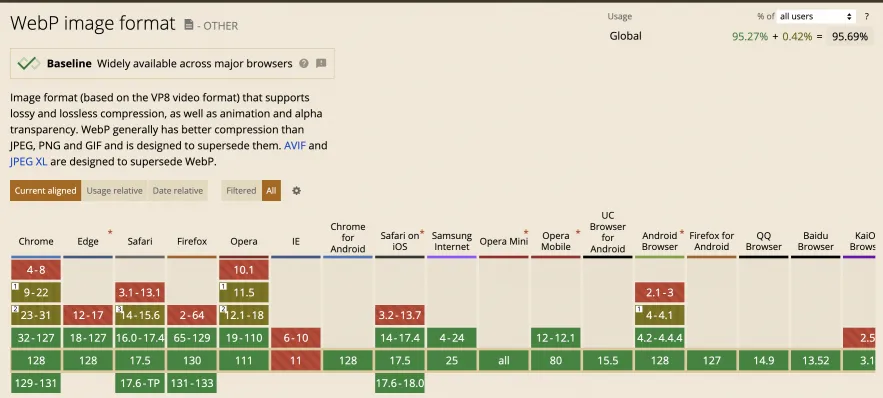

Suitable Image Formats

- WebP format images have superior algorithms compared to PNG/JPG, resulting in smaller file sizes than JPG/PNG, thus loading faster and consuming less bandwidth. The image below shows WebP compatibility.

Responsive Loading

- Refer to 2.2.4 HTML Code Performance Optimization - Providing Different Sources for Images and Videos. The essence is to load images of different sizes for different screen sizes, loading on-demand based on actual situations, without wasting bandwidth.

loading="lazy"

- The loading="lazy" attribute value indicates that the image should be lazy loaded, meaning it only starts loading when the image is about to enter the viewport. Similar to CSS Containment, applying content-visibility to images can also achieve a similar lazy loading effect.

- The difference between the two is that after setting loading="lazy", the network request for the image resource is not sent when the img tag is parsed, but only when the image gets close to the viewport; content-visibility only controls rendering and is unrelated to the network request for the image resource.

fetchpriority="high"

- The fetchpriority attribute allows developers to explicitly hint the loading priority of certain resources. When we want certain critical resources (such as important images or stylesheets) to load as quickly as possible, we can use fetchpriority="high".

decoding="async"

- decoding="async" can instruct the browser to decode images asynchronously, thereby improving page rendering performance. This attribute is particularly suitable for images that don't need to be displayed immediately, such as images at the bottom of the page or lazy-loaded images.

2.2.6 Animation Performance Optimization

Animation Usage Principles

- Remove any unnecessary animations, simplify animations as much as possible, or lazy load animations. Especially in middle and back-end scenarios, avoid overuse.

Animation Implementation Principles

- Try to process animations on the GPU. Browsers typically hand the following over to the GPU:

- 3D transform animations, such as transform: translateZ() and rotate3d().

- Elements that have certain other properties animated, such as position: fixed.

- Elements that have applied will-change.

- Specific elements that render in their own layer, including `<video>`, `<canvas>`, and `<iframe>`.

Prioritize CSS Implementation

- Avoid animating the following properties as much as possible, because changing them triggers reflow

- Modifying element dimensions, such as width, height, border, and padding.

- Repositioning elements, such as margin, top, bottom, left, and right.

- Changing element layout, such as align-content, align-items, and flex.

- Adding visual effects that change element geometry, such as box-shadow.

Prioritize using the following properties to reduce reflow

- opacity

- filter

- transform

Secondarily, implement using Web Animations API

- The Web Animations API provides many advanced features to help developers create more efficient and smoother animation effects. Such as fine-grained control, keyframe management, animation composition, etc.

Can be combined with requestAnimationFrame

- requestAnimationFrame ensures that the callback function is called before the browser's next repaint, ensuring synchronization between animation frames, meaning animations will be synchronized with the browser's refresh rate as much as possible, providing smoother visual effects. At the same time, the browser intelligently schedules requestAnimationFrame callbacks, automatically pausing animations when the page is not visible (e.g., minimized window or switched to another tab), thus saving computational resources.
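A frame loop driven by requestAnimationFrame can be sketched as below. To keep the example runnable outside a browser, the scheduler is passed in as a parameter; that parameter, the `animate` name, and the `box` element in the usage comment are assumptions made for this example.

```typescript
// In a browser, pass `(cb) => requestAnimationFrame(cb)` as the scheduler.
type FrameScheduler = (callback: (timestampMs: number) => void) => unknown;

// Calls `draw` once per frame with a progress value from 0 to 1.
function animate(
  durationMs: number,
  draw: (progress: number) => void,
  schedule: FrameScheduler,
): void {
  let startMs: number | undefined;
  const step = (timestampMs: number): void => {
    if (startMs === undefined) {
      startMs = timestampMs; // the first frame defines time zero
    }
    const progress = Math.min((timestampMs - startMs) / durationMs, 1);
    draw(progress);
    if (progress < 1) {
      schedule(step); // request the next frame only while still running
    }
  };
  schedule(step);
}

// Browser usage, assuming an element `box` exists on the page:
// animate(300, (p) => { box.style.transform = `translateX(${p * 200}px)`; },
//   (cb) => requestAnimationFrame(cb));
```

Progress is derived from the frame timestamp rather than from a frame counter, so the animation takes the same time on 60 Hz and 120 Hz displays.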

III. Common Analysis Tools

Detailed usage of each tool is not covered here; you can look it up online.

3.1 Chrome's Built-in Analysis Tools

3.1.1 Performance Panel

- Used for recording and analyzing a webpage's runtime performance, such as main-thread activity, rendering, and long tasks.

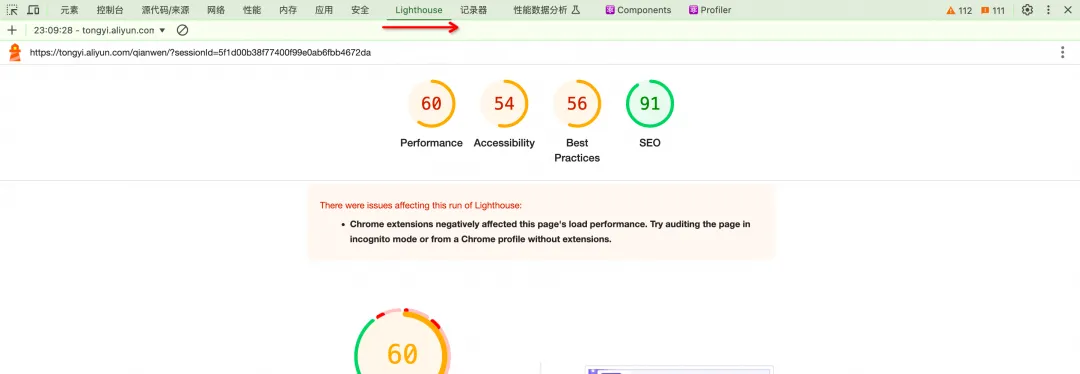

3.1.2 Lighthouse Panel

- Used for running automated audits on a webpage and producing a report with performance scores and improvement suggestions.

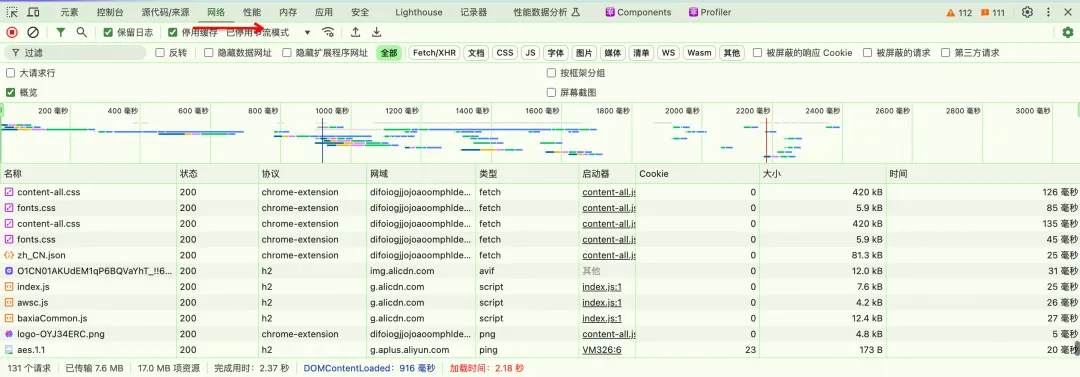

3.1.3 Network Panel

- Used for viewing webpage network resource loading conditions.

3.1.4 Performance Insights Panel (Soon to Be Removed)

- Used for analyzing and testing webpage performance, but will be deprecated soon.

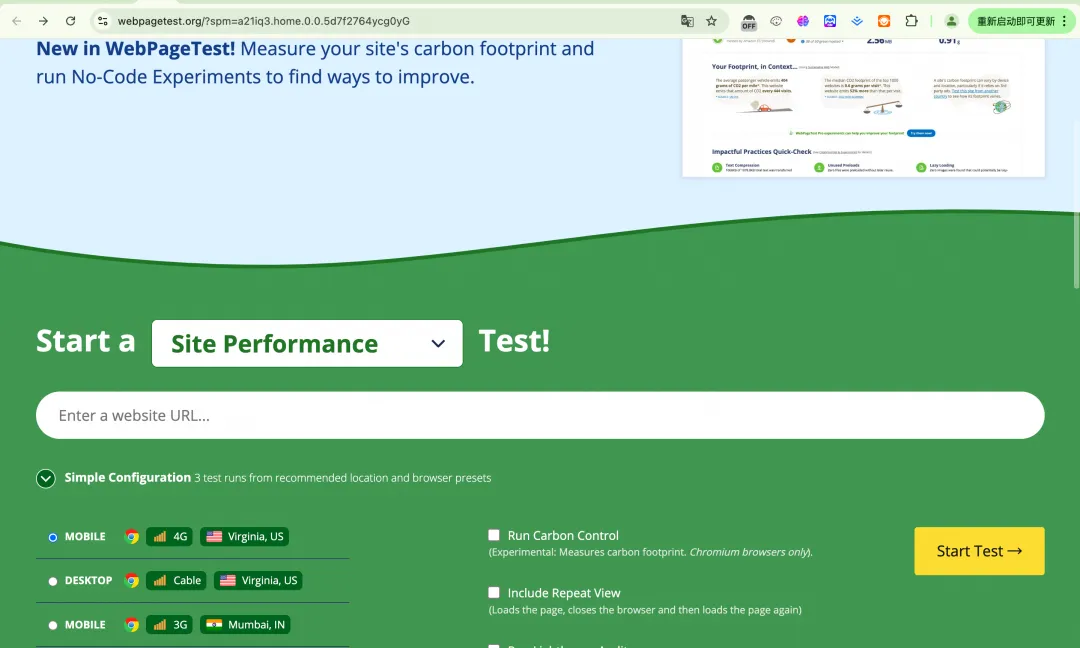

3.2 Third-party Evaluation Sites

Used for analyzing and testing webpage performance.

3.2.1 PageSpeed

3.2.2 WebPageTest

3.3 Webpack Analysis Plugins

- Webpack Bundle Analyzer

- A tool for analyzing Webpack build output. It helps developers better understand the composition of the build product (bundle), including the size distribution of various modules, libraries, and resource files.

- Source Map Explorer

- A tool for analyzing JavaScript source code size and its proportion in the packaged file (such as the bundle file after Webpack packaging).

- umi, ANALYZE=1

- umi's built-in solution for analyzing the composition and size distribution of the build output, enabled through the ANALYZE=1 environment variable.

3.4 VSCode Plugin

- Bundle Size

- A plugin for real-time viewing of Webpack build product size.

IV. Conclusion

This article introduces some common performance optimization strategies and performance analysis tools used for debugging optimization effects. In the actual performance optimization process, we can analyze our target project to be optimized, then adopt suitable strategies to solve specific problems, and finally use performance analysis tools to verify the optimization effect. Front-end technology is continuously developing, and performance optimization strategies will also keep pace with the times. We can boldly try and carefully verify.

The road ahead is long; I see no ending, yet high and low I'll search with my will unbending.

Reference Links:

[1]https://www.npmjs.com/package/umi-plugin-runtime-import

[2]https://www.npmjs.com/package/umi-plugin-runtime-import-v4

[3]https://developer.mozilla.org/en-US/docs/Web/API/Web_Workers_API/Using_web_workers

[4]https://developer.mozilla.org/en-US/docs/Web/API/WebGPU_API

[5]https://zh-hans.react.dev/reference/react/useMemo

[6]https://zh-hans.react.dev/reference/react/useCallback

[7]https://ahooks.js.org/zh-CN/hooks/use-request/cache#swr

[8]https://github.com/alibaba/hooks/blob/master/packages/hooks/src/useRequest/src/utils/cache.ts

[9]https://github.com/umijs/qiankun/issues/1555

[10]https://www.yuque.com/kuitos/gky7yw/gs4okg

[11]https://css-tricks.com/css-sprites/

[12]https://developer.mozilla.org/zh-CN/docs/Web/CSS/background-position